New Graphics Processing Techniques

As well as the new card, Nvidia was keen to share with us details of three new graphics processing techniques, each with its own catchy acronym: Voxel Global Illumination (VXGI), Dynamic Super Resolution (DSR) and Multi-Frame Sampled Anti-Aliasing (MFAA).

Voxel Global Illumination (VXGI)

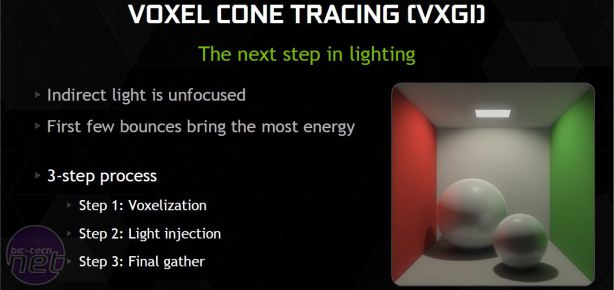

VXGI is a real-time global illumination engine built on a technique first revealed in 2011 by Nvidia engineer Cyril Crassin, who published both a paper and a video on it. It uses voxel grids and cone tracing to approximate global illumination: the combined effect of all direct light (light arriving straight from a light source) and indirect light (light that has already bounced off or passed through one or more objects) in a scene at any given moment. Nvidia considers it the next big leap forward for lighting, and a small step closer to real-time ray tracing.

Modelling lighting is the most computationally difficult problem in graphics. Indirect light is particularly costly and complex, as you have to consider the material properties of every object in the scene to render it accurately, and this becomes even harder to manage with geometry that is dynamic rather than fixed. Current global illumination techniques typically rely on pre-calculated lighting and carefully placed artwork, neither of which is well suited to animated or moving objects. VXGI aims to make the process run in real time, so that it works with dynamic geometry and lights and produces more realistic lighting.

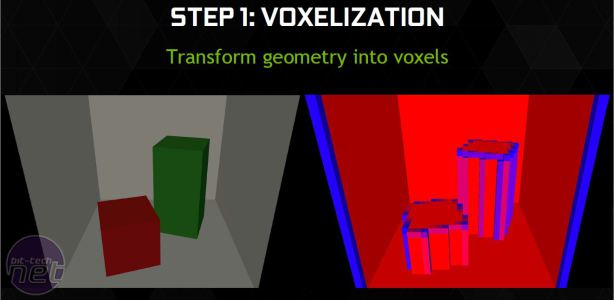

First comes voxelisation, which converts the 3D scene's geometry into a grid of small cubes called voxels. Each voxel is then marked as either empty or containing part of an object, and in the latter case the percentage of the voxel that the object covers is also calculated.
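As a rough illustration of the coverage step, the Python sketch below voxelises a single sphere (a stand-in for real scene geometry) into a cubic grid and estimates each voxel's coverage by testing a small lattice of sample points inside it. The function name, the sphere and the supersampling approach are all assumptions for illustration; VXGI itself voxelises triangles on the GPU, and the article does not describe its exact coverage method.

```python
import numpy as np

def voxelise_sphere(grid_res, centre, radius, samples_per_axis=4):
    """Hypothetical helper: coverage fraction (0 = empty, 1 = full) of every
    voxel for a sphere in the unit cube [0, 1)^3, estimated by supersampling."""
    voxel_size = 1.0 / grid_res
    # Evenly spaced sample offsets inside one voxel
    offsets = (np.arange(samples_per_axis) + 0.5) / samples_per_axis * voxel_size
    # Sample coordinates along one axis: shape (grid_res, samples_per_axis)
    axis = np.arange(grid_res)[:, None] * voxel_size + offsets[None, :]
    # Broadcast to (grid_res,)*3 voxels x (samples_per_axis,)*3 samples each
    x = axis[:, None, None, :, None, None]
    y = axis[None, :, None, None, :, None]
    z = axis[None, None, :, None, None, :]
    inside = (x - centre[0]) ** 2 + (y - centre[1]) ** 2 + (z - centre[2]) ** 2 <= radius ** 2
    # Share of each voxel's samples that fall inside the sphere
    return inside.mean(axis=(3, 4, 5))

voxel_coverage = voxelise_sphere(8, (0.5, 0.5, 0.5), 0.3)
empty = int((voxel_coverage == 0).sum())
full = int((voxel_coverage == 1).sum())
partial = voxel_coverage.size - empty - full
```

Voxels deep inside the sphere come out fully covered, voxels far from it are empty, and the ones the surface passes through get a fractional value. Raising `samples_per_axis` makes that fraction more precise at the cost of more point tests.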

With the coverage stage complete, it's time to add lighting information. Each object in the scene has information about how it responds to light (its colour, opacity, reflectiveness and so on), and this information is stored in every voxel the object occupies. The scene is then rendered multiple times from the perspective of the light source(s) in the scene, which reveals how much light hits each voxel.
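To make the light-injection idea concrete, here is a minimal sketch assuming a single directional light travelling along the grid's +z axis. Instead of rasterising the scene from the light's point of view, as described above, it simply marches through each column of voxels and attenuates the light by each voxel's opacity; for an axis-aligned directional light this gives the same occlusion. The function name and the use of coverage as opacity are assumptions for illustration.

```python
import numpy as np

def inject_directional_light(opacity, intensity=1.0):
    """Hypothetical helper: light reaching each voxel from a directional light
    travelling along +z.

    opacity: (X, Y, Z) array in [0, 1], e.g. the coverage from voxelisation.
    Returns an (X, Y, Z) array of how much light arrives at each voxel.
    """
    transmittance = 1.0 - np.asarray(opacity, dtype=float)
    # Fraction of light surviving after passing through slices 0..k
    survived = np.cumprod(transmittance, axis=2)
    # Light arriving at slice k has only passed through slices 0..k-1
    arriving = np.concatenate(
        [np.ones_like(survived[:, :, :1]), survived[:, :, :-1]], axis=2
    )
    return intensity * arriving
```

A fully opaque voxel casts a complete shadow on everything behind it in its column, while a half-covered voxel lets half of the light through.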

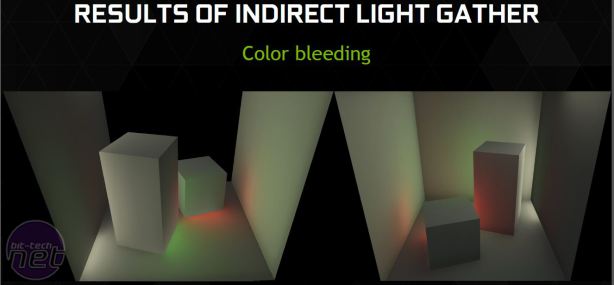

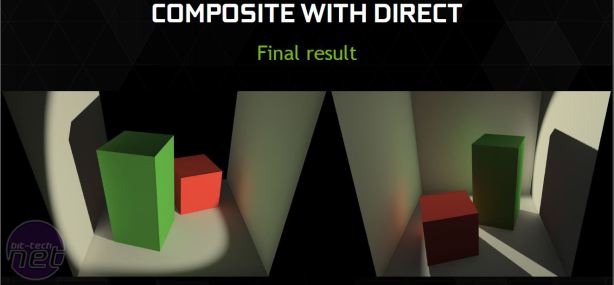

Using the stored information about where objects are and how they respond to light, it's then possible to calculate the colour, direction and intensity of any light reflected from any object. The result is a voxel data structure that represents the lighting in the scene at a given moment.
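One simple way to turn the stored data into reflected light is sketched below, assuming purely diffuse (Lambertian) surfaces and one stored normal per voxel. These are simplifications chosen for illustration: a diffuse surface reflects equally in all directions, so this sketch handles colour and intensity but treats the outgoing direction as uniform, unlike the fuller model the article alludes to.

```python
import numpy as np

def reflected_light(incident, coverage, albedo, normals, light_dir):
    """Hypothetical helper: colour and intensity of light bounced by each voxel.

    incident:  (X, Y, Z) light arriving at each voxel
    coverage:  (X, Y, Z) fraction of each voxel filled by geometry
    albedo:    (X, Y, Z, 3) RGB surface colour stored during voxelisation
    normals:   (X, Y, Z, 3) unit surface normal stored per voxel
    light_dir: direction the light travels in, as an (x, y, z) vector
    """
    to_light = -np.asarray(light_dir, dtype=float)
    to_light /= np.linalg.norm(to_light)
    # Lambert's cosine law: surfaces facing away from the light receive nothing
    cos_term = np.clip(np.asarray(normals, dtype=float) @ to_light, 0.0, None)
    # Only the covered part of a voxel intercepts light
    hit = incident * coverage * cos_term
    # Each colour channel reflects its share of the intercepted light
    return np.asarray(albedo, dtype=float) * hit[..., None]
```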

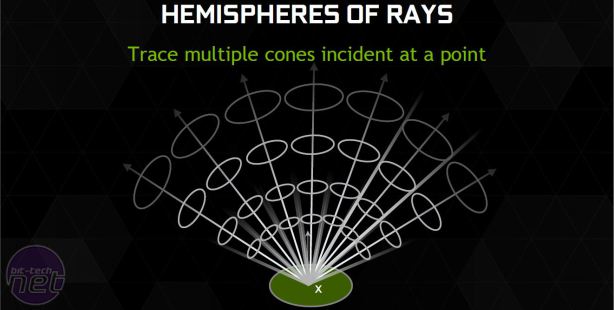

The final step is, of course, to rasterise the scene for display on a 2D screen, and here the voxel data structure is very powerful for accurate lighting calculations. Indirect lighting is computed through cone tracing, an approximation of ray tracing (in which individual rays are traced through a scene). As you can imagine, ray tracing is hugely expensive and, for now, far beyond what can be done in real time, but cone tracing bundles thousands of individual rays into cones, which are then traced through the voxel grid to produce indirect lighting effects. The properties of the cones can be altered to produce different lighting effects too, and it can all be done in real time, bringing a heightened level of realism to the lighting of moving objects.
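The sketch below shows the general shape of voxel cone tracing as it is commonly described: build a chain of progressively coarser (mipmapped) voxel grids, then march a cone outwards, sampling coarser levels as the cone widens and accumulating colour and opacity front to back. It assumes voxels store pre-multiplied RGB plus opacity and uses nearest-voxel lookups for brevity; real implementations filter between voxels and levels in hardware. All names here are hypothetical, and this is not Nvidia's VXGI code.

```python
import math
import numpy as np

def build_mips(grid):
    """Average 2x2x2 blocks repeatedly down to a single voxel.

    grid: (N, N, N, 4) array of pre-multiplied RGB plus opacity, N a power of two.
    """
    mips = [np.asarray(grid, dtype=float)]
    while mips[-1].shape[0] > 1:
        g = mips[-1]
        n = g.shape[0] // 2
        mips.append(g.reshape(n, 2, n, 2, n, 2, g.shape[-1]).mean(axis=(1, 3, 5)))
    return mips

def sample(mips, pos, level):
    """Nearest-voxel lookup at the mip level closest to `level`; pos in [0, 1)^3."""
    level = min(max(int(round(level)), 0), len(mips) - 1)
    g = mips[level]
    n = g.shape[0]
    i, j, k = np.clip((np.asarray(pos) * n).astype(int), 0, n - 1)
    return g[i, j, k]

def trace_cone(mips, origin, direction, aperture, max_dist=1.0):
    """March one cone front to back; returns (gathered RGB, occlusion).

    aperture: full cone angle in radians.
    """
    d = np.array(direction, dtype=float)
    d /= np.linalg.norm(d)
    o = np.array(origin, dtype=float)
    voxel = 1.0 / mips[0].shape[0]
    rgb, alpha = np.zeros(3), 0.0
    t = voxel  # start one voxel out so the cone does not sample its own origin
    while t < max_dist and alpha < 0.99:
        # The cone's footprint grows linearly with distance...
        diameter = max(2.0 * t * math.tan(aperture / 2.0), voxel)
        p = o + t * d
        if np.any(p < 0.0) or np.any(p >= 1.0):
            break  # left the voxel volume
        # ...so coarser mip levels (bigger voxels) are sampled further out
        s = sample(mips, p, math.log2(diameter / voxel))
        rgb += (1.0 - alpha) * s[:3]
        alpha += (1.0 - alpha) * s[3]
        t += 0.5 * diameter
    return rgb, alpha
```

Widening `aperture` makes each cone gather from a larger, blurrier region in fewer steps, which is one way the cones' properties can be tuned for different lighting effects: narrow cones approximate glossy reflections, wide ones diffuse bounce light.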

VXGI will be available in Unreal Engine 4, as well as other major engines, from Q4 of this year. Its software algorithm will run on all GPUs and can scale down to less powerful processors such as those in consoles (e.g. by lowering the density of the voxel grid), but it will be accelerated on GM2xx Maxwell parts thanks to their native support for Viewport Multicast and Conservative Raster, both of which speed up voxelisation.
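Why lowering grid density helps so much is simple arithmetic: a cubic grid's voxel count grows with the cube of its resolution. The sketch assumes a dense grid with a hypothetical 8 bytes per voxel; the article does not say how VXGI actually lays out its voxel data.

```python
def dense_grid_mib(resolution, bytes_per_voxel=8):
    """Memory for a dense resolution^3 voxel grid, in MiB (mip levels ignored)."""
    return resolution ** 3 * bytes_per_voxel / 2 ** 20

for res in (64, 128, 256):
    print(f"{res}^3 voxels: {dense_grid_mib(res):.0f} MiB")
```

Halving the resolution in each dimension cuts the voxel count, and with it memory and voxelisation work, by a factor of eight.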

Dynamic Super Resolution (DSR)

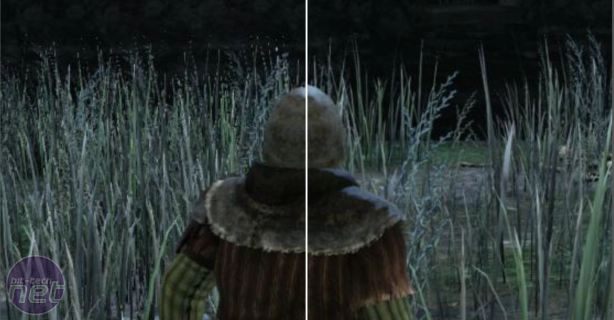

Next up is Dynamic Super Resolution, or DSR. This feature is being introduced with this generation of Maxwell products, but it will not remain Maxwell-exclusive. It works by rendering a game internally at a higher resolution than your display's native one, then downscaling the result to match your display resolution. The benefit is a better-looking final image with fewer aliasing artifacts, though it of course comes with a performance hit. It is aimed at less demanding games where, even at full settings, you have graphics headroom to spare but lack a display with a high enough resolution to take full advantage of it.

Similar results can be obtained using methods that are already available, but they are nearly always fiddly to set up and may well come with a host of compatibility problems in certain games. DSR, by contrast, is fully integrated into the driver, and novice users can quickly enable or disable it in GeForce Experience. To make the final image as smooth and jaggy-free as possible, Nvidia applies a 13-tap Gaussian blur when downscaling.
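The downscaling step can be sketched as a separable 13-tap Gaussian blur applied to the supersampled frame, followed by averaging each block of high-resolution pixels into one display pixel. The blur width (`sigma`) and the block-averaging step are assumptions for illustration; the article only states that a 13-tap Gaussian is used, not Nvidia's exact filter parameters. Only whole-number scale factors per axis are handled here (2 per axis corresponds to four times the pixel count).

```python
import numpy as np

def gaussian_kernel(taps=13, sigma=1.5):
    """Normalised 1D Gaussian weights; sigma is an assumed value."""
    x = np.arange(taps) - (taps - 1) / 2
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur_axis(img, kernel, axis):
    """Convolve along one axis, repeating edge pixels at the borders."""
    pad = len(kernel) // 2
    pad_width = [(0, 0)] * img.ndim
    pad_width[axis] = (pad, pad)
    padded = np.pad(img, pad_width, mode="edge")
    n = img.shape[axis]
    out = np.zeros(img.shape, dtype=float)
    for i, w in enumerate(kernel):
        out += w * np.take(padded, np.arange(i, i + n), axis=axis)
    return out

def dsr_downscale(hi_res, factor=2, taps=13, sigma=1.5):
    """Blur a supersampled frame, then average factor x factor pixel blocks."""
    k = gaussian_kernel(taps, sigma)
    blurred = blur_axis(blur_axis(np.asarray(hi_res, dtype=float), k, 0), k, 1)
    h, w = blurred.shape[:2]
    blocks = blurred.reshape(h // factor, factor, w // factor, factor, *blurred.shape[2:])
    return blocks.mean(axis=(1, 3))
```

Blurring before averaging is what suppresses fine, pixel-level detail (including jagged edges) that would otherwise alias when the image shrinks; a stronger blur gives a smoother but softer result.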

Multi-Frame Sampled Anti-Aliasing (MFAA)

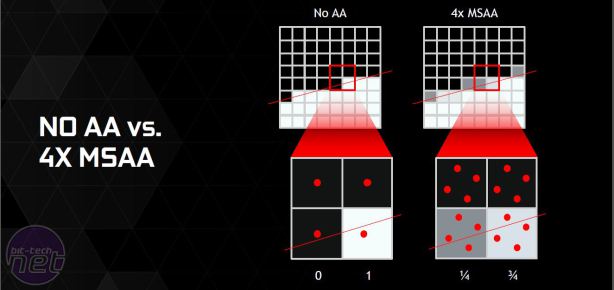

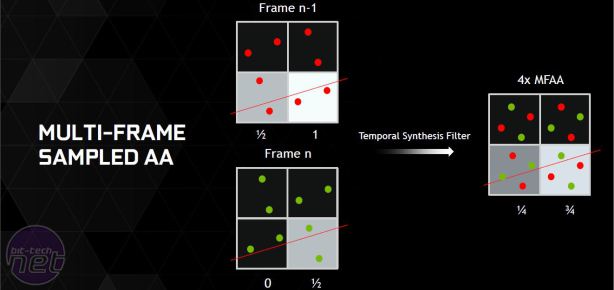

The final technique is MFAA, Multi-Frame Sampled Anti-Aliasing, designed for the opposite situation to DSR, i.e. where you need more frames per second but don't want to sacrifice image quality. It is exclusive to GM2xx GPUs, as it relies on hardware support for a technique called multi-pixel programmable sampling. Standard MSAA uses fixed sample patterns: 4x MSAA, for example, samples a pixel through which an edge passes at four fixed locations to calculate its colour, and these locations stay the same from frame to frame.

Multi-pixel programmable sampling, however, allows developers to program custom sample positions, which can vary from frame to frame or even within a single frame, and there are many positions to choose from: 256, on a 16x16 grid within each pixel. This capability can be used to build new AA methods, and MFAA is one that Nvidia has developed itself. Like DSR, it will be implemented at the driver level and switched on and off with a control panel setting. It is not available in the launch driver, but will arrive soon with support for a wide range of existing games.
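A toy model of how MSAA sample positions determine edge coverage is sketched below: positions are picked from a 16x16 grid inside a pixel (256 slots), and a pixel's coverage is the share of its samples that land inside a primitive. The 4-sample pattern shown is an illustrative rotated-grid layout, not necessarily the one any particular GPU uses, and the helper names are hypothetical; this models the idea, not the actual hardware interface.

```python
GRID = 16  # programmable positions snap to a 16x16 grid inside each pixel

def all_positions():
    """Every selectable sample position in one pixel, in pixel units [0, 1)."""
    return [(ix / GRID, iy / GRID) for iy in range(GRID) for ix in range(GRID)]

def coverage(pattern, inside):
    """Fraction of a pixel's samples that land inside a primitive.

    pattern: (ix, iy) integer indices into the 16x16 grid
    inside:  function (x, y) -> bool, True if the point is covered
    """
    for ix, iy in pattern:
        if not (0 <= ix < GRID and 0 <= iy < GRID):
            raise ValueError(f"sample ({ix}, {iy}) is off the {GRID}x{GRID} grid")
    hits = sum(inside(ix / GRID, iy / GRID) for ix, iy in pattern)
    return hits / len(pattern)

def below_diagonal(x, y):
    """An edge running corner to corner through the pixel."""
    return y < x

# Illustrative 4-sample pattern: with fixed MSAA it never changes,
# so the same pixel edge gets the same answer every frame.
FIXED_4X = [(6, 2), (14, 6), (2, 10), (10, 14)]
fixed_frames = [coverage(FIXED_4X, below_diagonal) for _ in range(3)]
```

With programmable sampling, the `pattern` argument could be swapped for a different one on every frame, which is the freedom MFAA builds on.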

The aim of MFAA is to produce an image that is almost identical to a given level of MSAA, but with the performance hit of the MSAA level below (e.g. 4x MSAA image quality at 2x MSAA performance). Essentially, it uses a 2x MSAA sample pattern but varies the sample positions from frame to frame. It then combines the sample data of the current frame with that of the previous frame, giving twice as many sample positions as it actually samples in each frame, and applies what Nvidia calls a Temporal Synthesis Filter to merge the data convincingly into the current frame. The result, Nvidia claims, is about 30 percent more performance with effectively the same image. Whether that claim holds up remains to be seen.
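The core idea can be modelled for a single static pixel: alternate between two 2-sample patterns that together cover the same four positions as a 4x pattern, and blend each frame's result with the previous frame's. The plain 50/50 blend is an assumption standing in for Nvidia's Temporal Synthesis Filter, whose details are not published here, and the sketch ignores motion between frames, which a real temporal filter has to handle. The patterns and helper names are hypothetical.

```python
GRID = 16  # sample positions on a 16x16 grid inside the pixel

def coverage(pattern, inside):
    """Fraction of samples (grid indices) that fall inside a primitive."""
    return sum(inside(ix / GRID, iy / GRID) for ix, iy in pattern) / len(pattern)

# Two hypothetical 2-sample patterns that alternate frame to frame;
# together they use the same four positions as FOUR_X.
PATTERN_A = [(6, 2), (2, 10)]
PATTERN_B = [(14, 6), (10, 14)]
FOUR_X = PATTERN_A + PATTERN_B

def mfaa_resolve(frame, inside, previous):
    """Sample 2 positions this frame and blend with last frame's raw result.

    Returns (this frame's raw coverage, resolved coverage).
    previous is None on the first frame, when there is nothing to blend with.
    """
    current = coverage(PATTERN_A if frame % 2 == 0 else PATTERN_B, inside)
    if previous is None:
        return current, current
    # Simple average as a stand-in for the Temporal Synthesis Filter
    return current, 0.5 * (current + previous)

def inside(x, y):
    """An arbitrary edge cutting through the pixel."""
    return x + y < 0.9

prev = None
results = []
for frame in range(4):
    prev, resolved = mfaa_resolve(frame, inside, prev)
    results.append(resolved)
```

Each frame only evaluates two samples, yet from the second frame onwards the resolved value matches what four samples per frame would give for this static edge (0.5 here, versus 1.0 from the first frame's two samples alone).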
